Ref. Ares(2020)2836155 - 02/06/2020

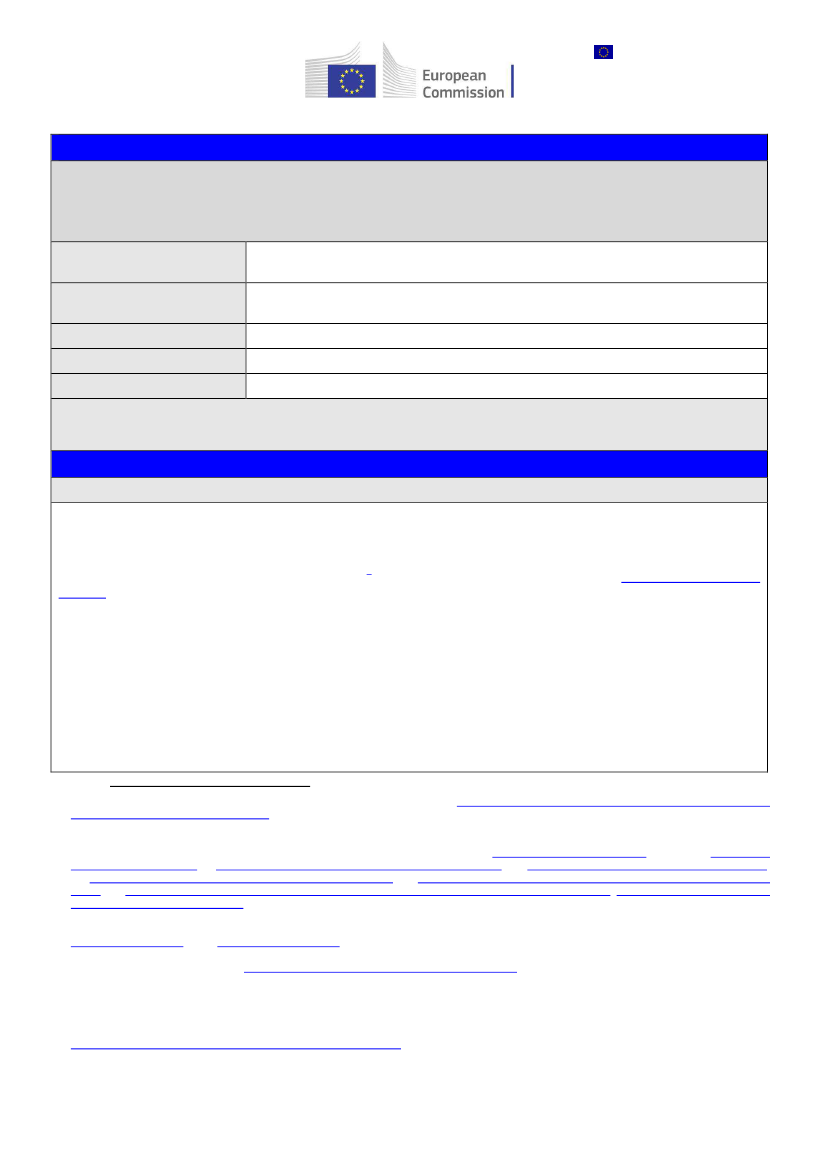

COMBINED EVALUATION ROADMAP/INCEPTION IMPACT ASSESSMENT

This combined evaluation roadmap/Inception Impact Assessment aims to inform citizens and stakeholders about the Commission's work in order to allow them to provide feedback on the intended initiative and to participate effectively in future consultation activities. Citizens and stakeholders are, in particular, invited to provide views on the Commission's understanding of the current situation, problem and possible solutions and to make available any relevant information that they may have, including on possible impacts of the different options.

TITLE OF THE INITIATIVE: Digital Services Act package: deepening the Internal Market and clarifying responsibilities for digital services

LEAD DG – RESPONSIBLE UNIT – AP NUMBER: CONNECT F.2

LIKELY TYPE OF INITIATIVE: Legislative instrument

INDICATIVE PLANNING: Q4 2020

ADDITIONAL INFORMATION: -

This combined roadmap/Inception Impact Assessment is provided for information purposes only. It does not prejudge the final decision of the Commission on whether this initiative will be pursued or on its final content. All elements of the initiative described by this document, including its timing, are subject to change.

A. Context, Evaluation, Problem definition and Subsidiarity Check

Context

The Commission announced[1] that it intends to propose new and revised rules to deepen the Internal Market for Digital Services[2], by increasing and harmonising the responsibilities and obligations of digital services and, in particular, online platforms, and to reinforce the oversight and supervision of digital services in the EU.

The horizontal legal framework for digital services has remained unchanged since the adoption of the e-Commerce Directive in 2000. The Directive harmonised the basic principles allowing the cross-border provision of services and has been a cornerstone for regulating digital services in the EU. In addition, several measures taken at EU level address, through both legislative instruments[3] and non-binding ones[4] and voluntary cooperation[5], targeted issues related to specific illegal or harmful activities conducted online by users of digital services and online platforms in particular.

Technologies, business models and societal challenges are evolving constantly. The wider spectrum of digital services is the backbone of an increasingly digitised world. This spectrum incorporates a wider range of services, including cloud infrastructure or content distribution networks. Online platforms like search engines, market places, social networks, or media-sharing platforms intermediate a wide spectrum of activities and play a particularly important role in how citizens communicate, share and consume information, and how businesses trade online, and which products and services are offered to consumers. Online advertising and recommender

[1] Digital Strategy “Shaping Europe’s Digital Future” of 19 February 2020, https://ec.europa.eu/info/sites/info/files/communication-shaping-europes-digital-future-feb2020_en_4.pdf

[2] The term ‘digital service’ is used interchangeably here with ‘information society service’, defined as ‘any service normally provided for remuneration, at a distance, by electronic means and at the individual request of a recipient of services’ (Directive (EU) 2015/1535).

[3] Legislation addressing specific types of illegal goods and illegal content includes: the Market Surveillance Regulation, the revised audio-visual media services directive, the directive on the enforcement of intellectual property rights, the directive on copyright in the digital single market, the regulation on market surveillance and compliance of products, the proposed regulation on preventing the dissemination of terrorist content online, the directive on combatting the sexual abuse and sexual exploitation of children and child pornography, the regulation on the marketing and use of explosives precursors, etc. The Directive on better enforcement and modernisation of EU consumer protection rules added transparency requirements for online marketplaces vis-à-vis consumers, which should become applicable in May 2022.

[4] The Commission has also set general guidelines to online platforms and Member States for tackling illegal content online through a Communication (2017) and a Recommendation (2018).

[5] For example, the EU Internet Forum against terrorist propaganda online, the Code of Conduct on countering illegal hate speech online, the Alliance to better protect minors online under the European Strategy for a better internet for children and the WePROTECT global alliance to end child sexual exploitation online, the Joint Action of the consumer protection cooperation network authorities, the Memorandum of understanding against counterfeit goods, the Online Advertising and IPR Memorandum of Understanding, the Safety Pledge to improve the safety of products sold online, etc. In the framework of the Consumer Protection Cooperation Regulation (CPC), the consumer protection authorities have also taken several coordinated actions to ensure that various platforms (e.g. travel booking operators, social media, online gaming platforms, web shops) conform with consumer protection law in the EU. A package of measures was also adopted to secure free and fair elections: https://ec.europa.eu/commission/presscorner/detail/en/IP_18_5681